A team from Art-A-Hack™ 2018 Special Edition — City University of New York

Team members:

- Ellen Pearlman

- Cynthia O'Neill

- Danni Liu

- Doori Rose

- Sarah Ing

The Sentimental Feeling/Second Skin team created a proof of concept to answer the question “Can we analyze and then show the sentiment of your innermost thoughts?”

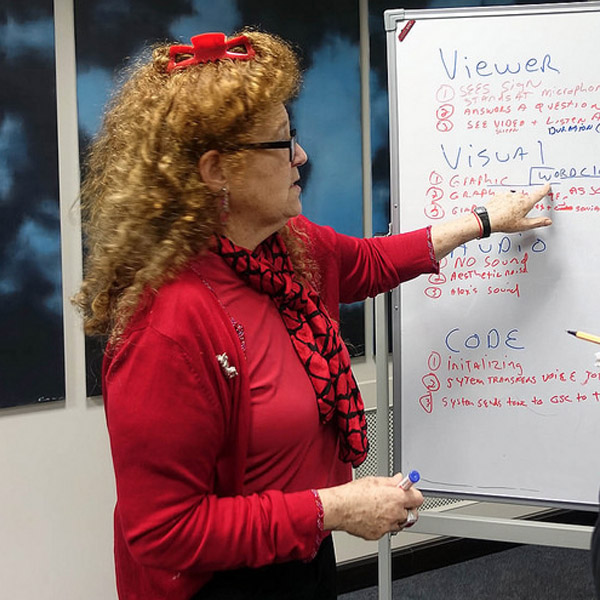

In their proof-of-concept performance, team lead Ellen Pearlman sits on stage wearing a brainwave headset. As other team members ask probing and personal questions, a custom-designed light necklace visualizes Ellen’s emotional responses as interpreted by the brainwave sensor.

At the same time, a speech-to-text algorithm transcribes her words, and a sentiment analysis is run on the transcript. The sentiment interpreted via this approach is visualized by a projected graphic behind the team.

In this way, the audience can see the fluctuating connections between the performer’s brainwaves and the emotional analysis of her speech. The performance depicts the sterility of algorithmic decision-making by AI entities in opposition to a sentient human’s emotions.

The performer’s brainwaves are visible on her body as a type of synthetic skin, body covering, or nervous system. This aims to highlight inherent tensions between implicit mathematical machine learning analysis (emotionally intelligent AIs) and all-too-human irrationality (brain-computer interface).

The team began by prototyping different consumer, mobile-based EEG headsets (Muse, NeuroSky) and constructing portable LED light sculpted body wearables. They went on to write code for a cloud-based Google API that analyzed sentiment, or feeling.

During the Art-A-Hack co-working sessions, they were able to connect two EEG readings to the lights: attention, which displayed as the color magenta, and meditation, which displayed as the color aqua. They synchronized these readings with the sentiment analysis of a subject’s verbal response to a question, shown as either positive (a large projected circle) or negative (a small projected circle).
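The mapping described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the team's code: it assumes attention and meditation arrive as 0 to 100 readings (as NeuroSky-style headsets report them), that the sentiment score runs from -1.0 to 1.0, that a neutral score of 0 counts as positive, and that only the stronger of the two EEG readings lights the wearable. The function names, the `Frame` type, and the circle sizes are hypothetical.

```python
from dataclasses import dataclass

# Colors named in the write-up; the RGB values are the standard ones.
MAGENTA = (255, 0, 255)  # attention
AQUA = (0, 255, 255)     # meditation


@dataclass
class Frame:
    """One synchronized update: wearable color plus projected circle size."""
    led_rgb: tuple
    circle_radius: int


def scale(color, level):
    """Dim an RGB color by a reading clamped to the 0-100 range (assumed)."""
    level = max(0, min(100, level))
    return tuple(channel * level // 100 for channel in color)


def led_color(attention, meditation):
    """Assumption: show the stronger reading's color, dimmed by its strength."""
    if attention >= meditation:
        return scale(MAGENTA, attention)
    return scale(AQUA, meditation)


def circle_radius(sentiment_score, small=50, large=200):
    """Positive sentiment gives a large circle, negative a small one.

    The pixel sizes are placeholders; a score of exactly 0 is treated
    as positive here, which is an assumption.
    """
    return large if sentiment_score >= 0 else small


def render_frame(attention, meditation, sentiment_score):
    """Combine one EEG sample and one sentiment score into a single frame."""
    return Frame(led_color(attention, meditation), circle_radius(sentiment_score))
```

For example, `render_frame(80, 40, 0.6)` would yield a fairly bright magenta wearable with a large circle, while `render_frame(20, 50, -0.3)` would yield a dimmer aqua with a small circle.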

This established the feasibility of a proof-of-concept feedback loop between an emotionally intelligent AI and human brainwaves. It also lays the groundwork for a future database-driven AI ‘character’.

The project is a prototype for an Artificially Intelligent Brain Opera, a concept championed by team lead Ellen Pearlman. The two-character opera will have a live performer wearing an EEG headset that analyzes their emotional responses and is connected to a synthetic skin made of light.

The second character is an emotionally intelligent artificial intelligence entity database, which analyzes the human’s emotional responses and replies using a mathematically derived formula that is augmented through machine learning.

In the future, AI entities will analyze human responses, whether verbal or biometric. These analyses will be used to make decisions based on algorithmic formulas. The potential for misuse of this type of information is high, and adequate means of redress for its pitfalls are lacking.

This Art-A-Hack experiment is a first step towards developing an AI brainwave opera concerning these issues.